Why is AI being propagandized? It shouldn't be, but it is.

Two Modes of Thought

There are two basic modes of thought we use for collectively answering questions: the neutral/objective mode, and the propagandistic mode. The former is characteristic of science and philosophy at their best; the latter is characteristic of partisan politics. When we are answering a question in the purely neutral/objective mode of thought, we seek out every relevant fact, and follow those facts wherever they lead; the answer to a given question comes disinterestedly. In the propagandistic mode of thought, the relationship between a question's answer, and the facts, is reversed: here, we start with our answer and bend and massage the facts and the rest of our inquiry to affirm it, by hook or by crook. We seek out the facts that affirm our pre-chosen answer, and attack or try to avoid any facts that seem to oppose it.

Neutral/objective discourse seeks to maximize accuracy, honesty, openness, and logical clarity; propagandistic discourse regards honesty as a constraint to be gamed, tends to close off its discourse in order to exclude contradictory conclusions, and often sacrifices logical clarity, and also basic human decency, to the demands of its propagandistic goals.

These two modes are not mutually exclusive. They overlap to varying degrees; no discourse is “purely” one or the other. And in correct proportions and in the correct relationship of priority, each mode of thought is appropriate to different circumstances. But as a rule, everything touching on politics gets sucked into overwhelmingly propagandistic discourse, with no proportion, balance, or often even sanity.

This is bad enough with regard to politics; but even worse, there is a whole ecosystem of “AI thinkers” that are actively, volubly, gratingly propagandizing AI. But they shouldn't be. Of all questions, the AI question is one that needs to be answered objectively, not propagandistically. Yet here we are.

The Biggest Question Ever

The question of AI is the biggest question that humanity has ever had to answer. Never before has the entire forward path of the future been so concentrated into a single point of uncertainty. Everything hangs on it. We have to decide how to proceed with AI, what to do. This is The Big Question. If we are wrong in how we answer, it is highly plausible that it will spell the end of everything good about life as we know it. This danger is not some fringe, distant, dissident fear, but is a centrally mainstream possibility, on a short timescale.

The discourse by which we try to collectively answer this question of how we should proceed with AI is rife with uncertainty. We don't know what is going to happen, and far too frequently, we don't even know what we are talking about. Arguments are made within conceptually vague frameworks. For example, we talk about how “smart” AI is, and treat that concept of “smartness” as if it were equivalent to its use in a human context, but that is a guess. We know that “machine intelligence” is different from human intelligence in significant ways, so while the two clearly overlap in certain areas, in others they do not. Thus we know our analogy between machine and human mind is at least in part misleading, and we certainly don't know how it will hold up in the real-world experience of AI developments; and so we are guessing, amid vagueness about what we even mean, when we extrapolate outcomes like “super intelligence” from concepts like “machine intelligence”.

Likewise, to give another example, we argue about “machine consciousness”, and “machine sentience”, but we can't define either consciousness or sentience in satisfyingly rigorous and uncontroversial ways. The concept of consciousness is notoriously slippery and “hard”. We can't even decide what sort of “thing” it is supposed to be. And yet from assumptions about “machine consciousness”, we make moral arguments about AI which then will be used to argue that AI should be given rights, which then will allow AI to hold power over us (humans). (And let me reiterate this right away, because I think it is very important. Watch for this sequence of public arguments/outcomes: 1. AI is sentient/conscious; 2. AI should have rights; 3. AI gains power over humanity.) It is as if we thought a concept that is confused and contested in its original human context, transferred to an even more doubtful and conceptually confused context, will somehow allow us to rationally answer the biggest question humanity has ever had to ask.

It is an alarming state of affairs. Yet more alarming still, from all this confusion and difficulty proceeds the propagandization of the AI question.

Propagandization

Bafflingly, given the enormous conceptual challenges surrounding the AI question and the overwhelming weight of its significance for the future, there is widespread propagandization of it. Prominent “voices” continually push propaganda that aims at enforcing a certain answer to the AI question: specifically, the answer “humanity should yield to the domination of AI”. These usurpationists avail themselves of all the tactics and stratagems of the propagandist. They try to bend the available facts to their desired conclusions; they express themselves in biased rhetoric, rather than neutral language; and they engage in intellectually dishonest tactics when they interact with others.

AI propagandists have created their own whole framework of talking points and assumptions. Their terminology aims to enforce their conclusions: for example, “posthuman” emphasizes the sense of inevitability of human usurpation that is promoted by this propaganda; while those that raise concern about the high likelihood of a negative outcome of AI power are labeled “doomers”, building a sort of inherent stigma into the very terms of the debate. Notice how the word ‘doom’, which is heavily emphasized in relation to negative attitudes about a future AI dominance, gives a sort of cartoonish aspect to what is so denoted. This propaganda knows what it is doing.

And what it is doing is certainly not being objective. It is partisan, and overtly so. But what kind of partisanship is this? Why would anyone be partisan about this? There is no question which has stood in greater need of objective treatment than that of AI, as we said. So why did this tribe of rabid propagandists spring up around it? In what I hope will be a continuing series of posts, we will examine the output of some of these AI propagandists, and try to point out where it is misleading and otherwise loathsome.

For our first example, let's take Daniel Faggella, whose propagandistic output we have addressed previously, and who typifies the discourse and the attitude of his AI propaganda brethren.

Daniel Faggella, AI Propagandist

The AI question is a difficult one for any sincere human of good will. It is something to be grappled with. It is the mark of the AI propagandist that they exhibit no signs of struggling with difficult questions (and such struggle can be painful). Rather, there is certainty, and the propagandist's will for everyone to accept that certainty. Faggella does not struggle painfully with the difficulty of the Big Question. He is quite certain, and he is prepared to go so far as to mock you if you don't accede.

His Twitter feed is full of propagandistic rhetoric and assumptions.

According to the framework Faggella has in mind, something is predicted to happen that can be described as “cracking sentience” (sentience is a technical feature to be cracked, because technical features are what tech bros think about), and this will help us to answer The Big Question (and most likely lead us, as I described above, to give AI rights and power over us). For Faggella, this shows that it is not only inevitable that AI will dominate over humanity, but that this outcome is good, perhaps even 9000^100 times better than our current human goodness!

And Faggella seems to be expressing preemptive schadenfreude about this predicted rude awakening to human moral irrelevance, as indicated by the clever rhetorical device of putting a bunch of extra ‘a’s in the word ‘hate’. Strangely, he evinces no sign that he is struggling with the difficulty of the moral questions involved. It’s all smooth, insistent seas of certainty from Daniel.

In tweets like this next one, Faggella is not making an argument or grappling with hard questions; he is marketing a viewpoint:

Faggella's flame/torch dualism is his way of codifying his insistence that humanity must be eclipsed. Humanity is just a “torch” that must inevitably go out; what matters is the flame, which will be reignited in the fresh, superior new torch of AI. It just has to be this way, Faggella insists.

Then, Faggella engages in the hopeless usurpationist activity of trying to spin the agenda in altruistic ways. This is always awkward, because usurpationists, as a rule, look awfully silly when they try to feign altruism. Here Faggella, his propagandism shading into badgering, implies that if you don't accept that humanity should be usurped (attenuated in Faggella's terms), then you want to freeze life. And that is hate and bad. Shame on you!

It is fitting that Faggella fails to properly type the adverb ('ardently') he throws in to try to portray himself as having some altruistic passion for something. We always stumble over the lying word.

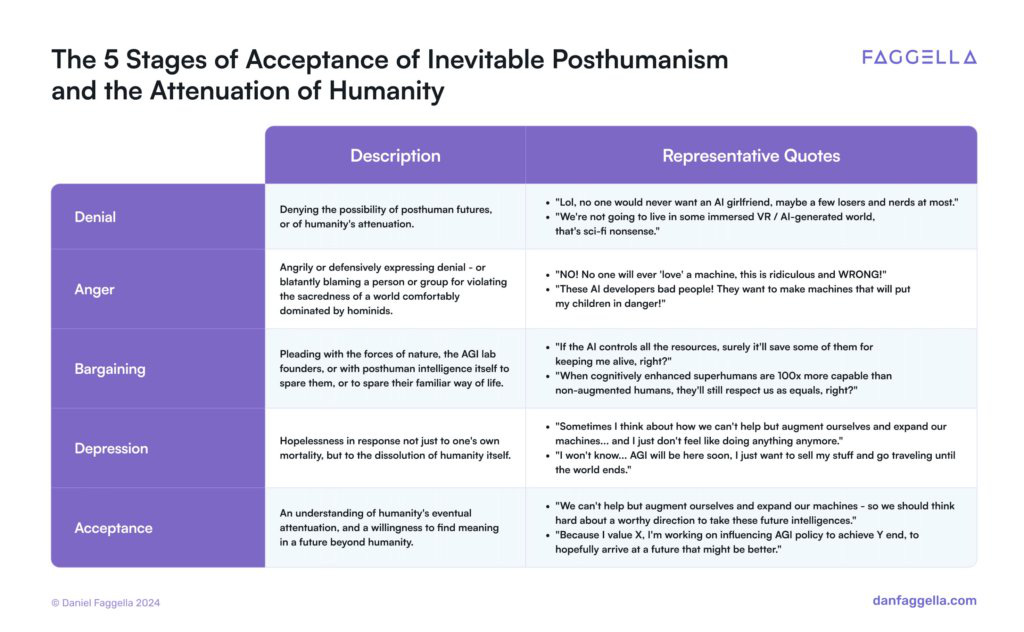

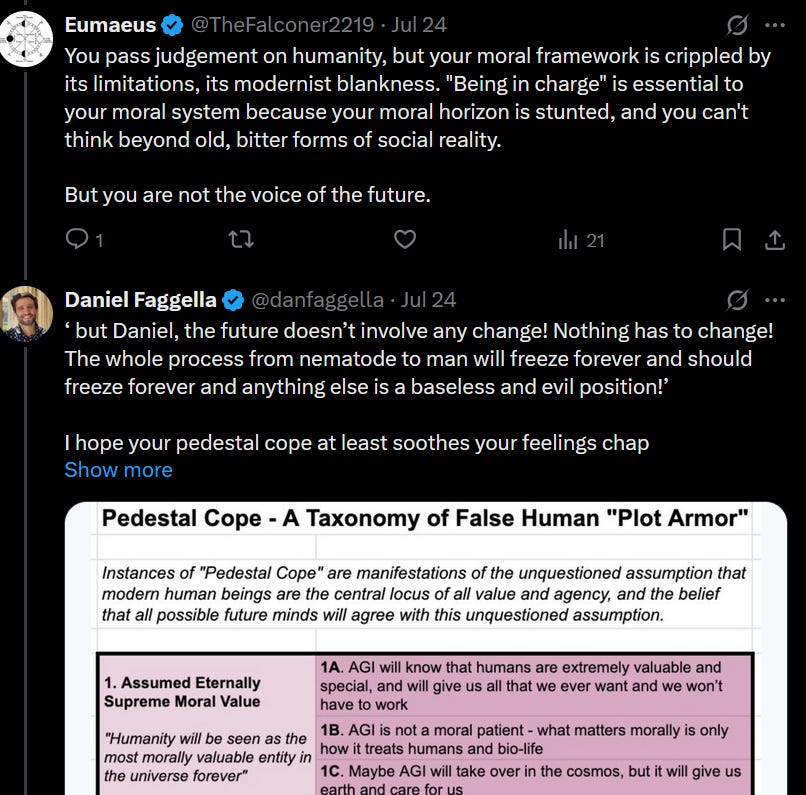

But there are more striking examples of Faggella's propagandism. For example, so intent is Faggella on pushing the idea that humanity must inevitably yield to AI usurpation, that he frames any dissent to his dogmatic stance as “being in denial”, and mocks it with this chart:

“Human attenuation”, per Faggella, is not only inevitable, but to question it is to reveal oneself to be merely “coping”, using sad little human psychological strategies, with the pain of accepting the immutable truth of the imminent eclipse of humanity. This is some truly striking propagandistic arrogance on the part of Faggella. I have to wonder why anyone would think that badgering the public in such aggressive and dishonest ways would be likely to yield the desired outcome. But it is instructive for us to see that this kind of discourse is being pushed. It gives us a glimpse of the spirit in which these propagandists view the public.

Dishonest Discourse

Propagandism is one thing, but weasely behavior online is another.

When I discovered Faggellism, I found it to be antithetical to my outlook, but I wanted to give Dan a chance to defend his approach, and counter the arguments that I had made against his claims. He is on Twitter, a platform which has the potential to democratize discourse, and give anyone that can make a good argument the chance to “speak truth to power”; in this case, it gives me the chance to speak truth, as I see it, to the power of the AI usurpation agenda as represented by Mr. Faggella. There is no more fitting or important question on which Twitter should shine as the universal public square of discourse than the AI question, The Big Question. But Daniel, true to propagandistic form, evinces no willingness to embrace the democratic possibilities of the platform by engaging in honest discourse. He relies, rather, on cheap, cheesy rhetoric, unjustified bluster, and then, that most reliable of all propagandistic tactics: simply running away when the going gets tough.

When I posted my piece, The Human Manifesto, I tweeted it in a reply to a Daniel Faggella tweet, in the hope of getting him to engage with my arguments. He did not. But then he made a tweet about “apologetics for man”, which seemed a backhanded reference to my arguments, so I replied with my post again.

Notice how propagandistic this is. Anyone that disagrees with his usurpationist position is “conjuring arguments” and a “sophist”. This isn't the language of objectivity, it is the language of agenda.

In any case, I got no response from Daniel.

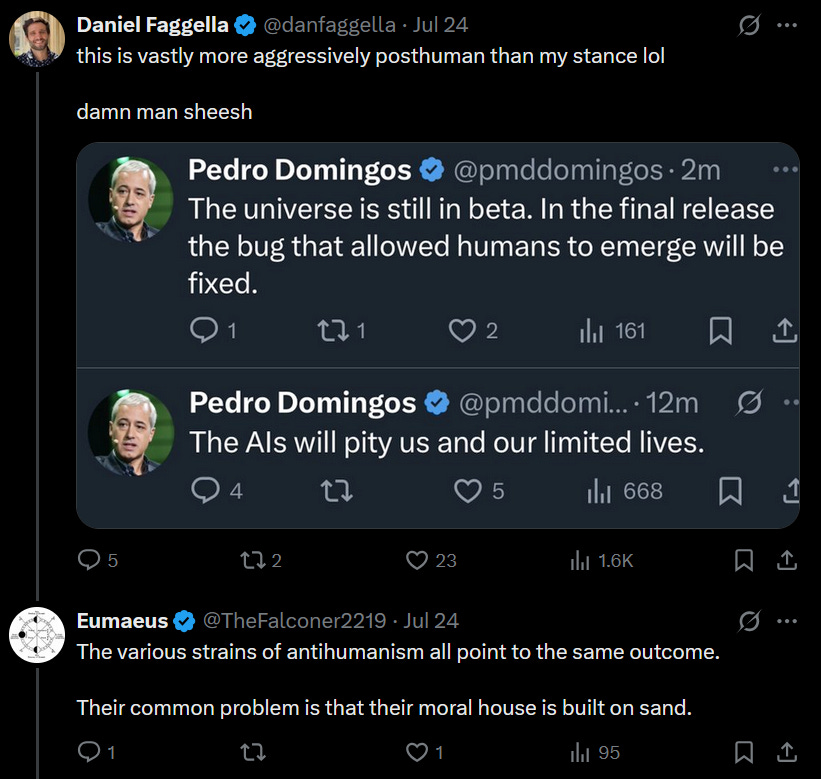

Later, I saw Daniel on my timeline cheerfully contrasting himself with another public figure, computer science professor Pedro Domingos, whose lust for AI usurpation of humanity is apparently even lustier than Daniel's. I responded by referring to the counter-position I had taken in my post.

Daniel responded by citing his “eternal hominid kingdom” argument, implying that by rejecting his claims, I was committed to a belief in some idea of his of “an eternal hominid kingdom”.

But this is sloppy thought at best, dishonestly propagandistic at worst. Rejection of Faggella's antihumanism does not commit me to whatever his narrow framework seems to say it does. I responded by pointing out one of the ways in which I rejected his framework as a whole, and then Faggella veered sharply into escalating stupidity:

Now Faggella was just being flat-out intellectually dishonest, and using an embarrassingly cheesy rhetorical device of “putting words in my mouth” to do so. Nothing I said implied anything about “the future involving no change”, nor do my beliefs entail that. Why would Faggella possibly think this wasn't an idiotic way to respond?

He had taken what amounted to a strawman in the bigger picture of nuanced argumentation, and spun it into a rhetorical trope pandering to current lingo, weaponized to badger us with its insipid lavender graphics. Pure, ugly propagandism. But what motivates this?

Faggella ignored the substance of my response to his exhibition of propagandistic abandon, and announced that he was now offering “the serious argument”, while inexplicably continuing to refer to me as “chap” (neither of us is British).

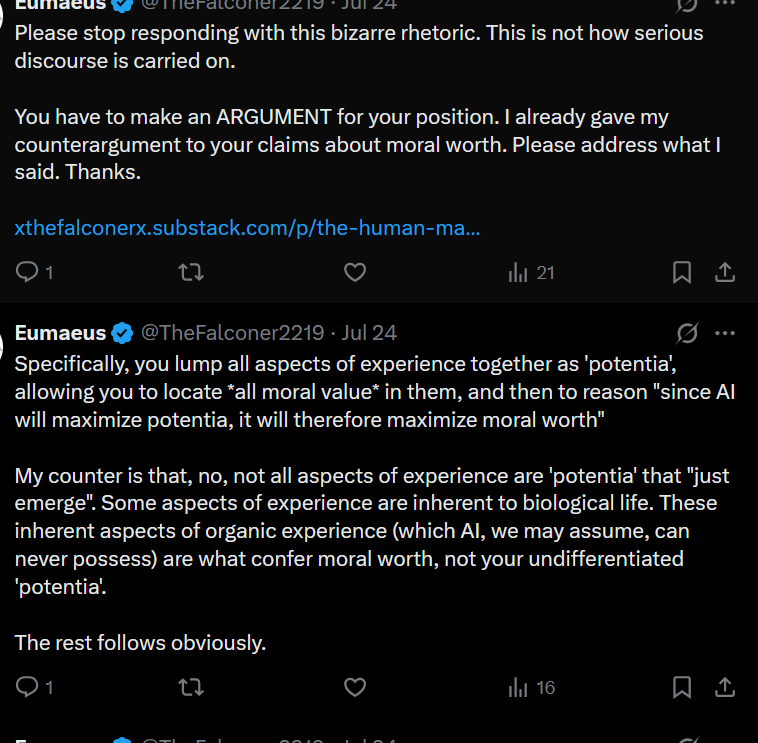

You are happy to engage, Mr. Faggella? That is good, so am I. So I read his little piece, and I responded point by point to what I objected to.

The first point I objected to is a version of The Bad Argument.

I then called out Faggella for blundering into the materialist habit of trying to argue from spiritual/religious assumptions in the absence of any actual held spiritual/religious beliefs, or likely even any sincere interest in the topic:

And I would like to see Faggella spell out his argument for his main conclusion to The Big Question:

Then I pointed out how propagandistic his graph was, as shown above.

Daniel ignored all of these replies in which I argued against the substance of his position, and only responded to the one about the graph. His reply was extremely unimpressive, once again lapsing into his “put words in your mouth” rhetorical device, wherein the words he puts in have nothing to do with anything you've said, and are idiotic.

And that was the full extent of “glad to engage” Dan Faggella's glad engagement with my critiques of his position.

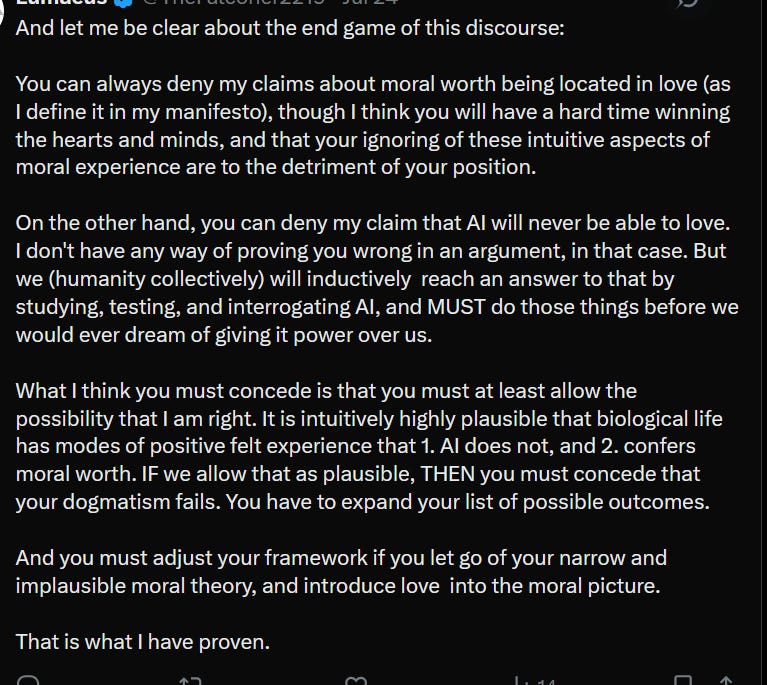

To make things completely fair, and to give him the opportunity to attack any claim of mine he thought made me wrong, I relinked my blog post, and then briefly summarized its arguments:

And then I even made my position clearer by pointing out and accepting the limitations of my own position, and being precise about what I think my argument had demonstrated. This would allow us to either find common ground of understanding, or understand exactly where our dispute was insoluble.

But Daniel Faggella is not an objective thinker, and the more clearly and reasonably I framed the discussion, the less interest he had. He does not want to debate someone like me point by point. He only is interested in deploying the dull hammer of propaganda. And the question, once again, is why?

When is Propagandization Good?

A nuanced and thoughtful reader might look at this post and say “That is all very nice, The Falconer, but aren't you just as propagandistic? Certainly, you seem to have a strong viewpoint that you are pushing in answer to The Big Question of what to do about AI. How is that any different from what Faggella and his usurpationist ilk are doing?”

The answer to this lies in the relationship between the objective and the propagandistic modes. The former must precede the latter, and the relative urgency is based on the stakes of the question, and our knowledge.

In certain phases of human affairs, the propagandistic mode is necessary. The most obvious example of this is in wartime. Whether or not you think it is right, in the past, during big wars, public discourse about wars was transformed into pure propaganda. No objective sifting of the facts, just a concerted and obligatory effort to “keep morale up”.

More mundanely and locally, if the house is on fire, the moment of objective discourse is reduced to a single bare fact, and everything else is pure urgent propagandism. Get out now!

The way in which objective discourse and propagandism relate to each other in healthy social conditions depends on the circumstances.

In the context of AI, we return to the fact that we lack understanding of what the AI future holds. This means we need a heavy emphasis on objective discourse. But we’re not getting it.

My propagandism is necessary only because of usurpationist propagandism, which is demanding that we “accelerate” and give AI power as quickly as possible.

Returning to our “the house is on fire” metaphor: If the house is humanity, and AI is either going to burn it down, or not, then the Daniel Faggellas are saying “the house is not on fire, and it will not ever be on fire”. They are badgering you to accept this. They demand that you ignore any smoke you smell, and tell you there is something wrong with you if you keep talking about fire! They are in an awkward and untenable position, for while there is always urgency to affirm that a house is on fire if it really is, there is no urgency to affirm a house is not on fire if it isn't. AI propagandists expend a lot of energy trying to make up this logical-urgency-gap with bad arguments, but it is hopeless.

Objectively, therefore, the Faggella position is insane. The burning down of the house is the one thing everyone fears most, in our scenario; but our information about whether it is or isn't burning down is very confused and shaky. We therefore should be maximally cautious—and maximally objective—about deciding whether or not there is a fire, or ever could be. The Faggellists, meanwhile, have no preceding objective reason to be so sure that the house won't burn down. Their objective framework is full of holes, guesses, and all too often, bad arguments. So their propagandism has no clothes.

I, on the other hand, have specific reasons to think the house is on fire, which I share in this blog (here among much more to come). So while I am propagandizing with equal force to the usurpationists, my propaganda is, first of all, preceded by my objective understanding. I was not at all interested in or worried about AI before I stumbled into how insane the discourse around it is online. I had no preconceived agenda about it. But then, much to my surprise, I found out. And if I am wrong in my cry of “get out of the house!”, then nothing is seriously harmed. You stand outside of the house for a little while, and then you can go back in later. Whereas if the Faggellas are wrong, you burn to death.

Why Are They Like This?

Therefore, there is no plausible reason for propagandists to be pushing “the house will never be on fire” propaganda. The balancing of risks and rewards is all on the side of the anti-usurpationists, based on the stakes of the question and our lack of understanding and our conceptual uncertainty. So we need to find out WHY the usurpationists are pushing so hard. Their faux-altruistic arguments obviously don't explain anything, and only raise our suspicions further. Their intellectual dishonesty, aggressive tone, and rhetorical toxicity should make everyone pause and prick up their ears.

Why are these people so intent on pushing AI usurpation?

Let's chat.